Naive Bayes

Today I want to talk a bit about Naive Bayes Machine Learning Algorithm, it's logic, usage area, advantages and disadvantages, those are the topics which this article covers. Naive Bayes Machine Learning Algorithm is one of the most popular and widely used machine learning algorithms. It is straightforward and powerful and also it is the algorithm which is suggested to try even if you have millions of the records with their attributes, it is kind of classifier which is one of the members of Supervised Learning Algorithms.

This algorithm is called naive Bayes or idiot Bayes as well. The reason for calling this algorithm like that is the simple calculation of the probabilities. The algorithm works by assuming that given data is conditionally independent and this assumption may not fit in real data, but somehow this approach performs surprisingly well on the data even in case of not fitting.

Some of the real world usage of the Naive Bayes Algorithm

Text classification, spam filtering. recommendation systems, etc.

Advantages

It is the fast and highly scalable algorithm.

It is a simple algorithm which depends on computing probabilities.

It can be easily fed by the small dataset.

Good choice for Text Classifications problems.

Disadvantages

It cannot learn the relationship between features.

The strong assumption of independence class, it is almost impossible to find such data in real life.

To be able to understand this algorithm firstly we need to understand Bayes theorem, so here the brief explanation of the Bayes theorem;

Bayes Theorem

Bayes theorem named after Reverend Thomas Bayes (1701-1761), it is based on conditional probability, it means the probability that something will happen depend on something else has already happened. By using this theorem, we can calculate the probability of an event by using its prior knowledge.

Here is the formula for Calculating the Conditional Probability

P(T) is the probability of thesis is true, it is also known as the prior probability.

P(E) is the probability of evidence.

P(E|T) is the probability of the evidence when thesis is true

P(T|E) is the probability of the thesis when evidence is exist

After brief information about Bayes Theorem and it's logic, let's continue with Naive Bayes Algorithm.

Naive Bayes Algorithm Example

So now, it is time to do an example to understand how we can apply this algorithm to problem-solving.

Let's assume that we want to predict user comment if it is positive or negative, to be able to do this, we need to feed our algorithm with some knowledge which it can be used to make predictions later.

Let's start with a dataset which we can use for this purpose. You can download Dataset from this link.

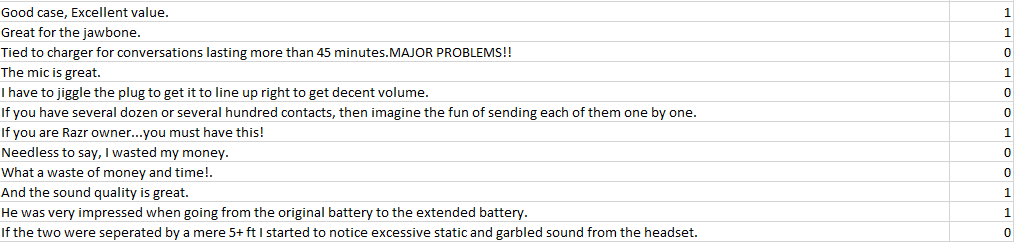

As you can see below, the dataset contains User Comment and Class, '1' means positive and '0' means negative comments.

Before jumping in writing code, we need to understand that if we want to use this algorithm in production, we will need much more data than this dataset contains.

This dataset is an example to show how the algorithm works by looking prior knowledge for making predictions, it is not for production usage.

To be able to make predictions about the type of the user comments, we need to make some calculations for probabilities of the words according to their usage in the type of the comment. A different way to say, we need to calculate all words' probabilities according to the type of comments (positive or negative) which they used.

Let's Write Some Code

To be able to work with comments, we define a model class which we use for defining sentences in each comment, or in our case we can say that each sentence is the comment, so we need to model class which we can define our training comments.

Sentence Model

public class SentenceModel

{

public SentenceModel(string text)

{

Text = text;

}

public SentenceModel(string text, double category)

{

Text = text;

Category = category;

}

public string Text { get; private set; }

public double Category { get; private set; }

}

We can write a helper function for loading all sentences from the file into our list of sentences and then we can define a list of the SentenceModel which we can keep all comments and their type and use for feeding our algorithm.

Load Data Function

///

/// Loads data from the file

///

/// Filename

/// Name of the sheet

/// Columns which needs to fetch,if it is null all columns will be fetched

public DataSet LoadData(string fileName, string sheet, string[] columns = null)

{

if (!File.Exists(fileName))

throw new FileNotFoundException();

var connStr =

string.Format(

"Provider=Microsoft.ACE.OLEDB.12.0;Data Source={0};Extended Properties=\"Excel 12.0 Xml;HDR=YES;IMEX=1\";",

fileName);

//Create dataset

var dataSet = new DataSet();

dataSet.Tables.Add(new DataTable(sheet));

var table = dataSet.Tables[0];

if (columns != null)

for (var i = 0; i <= columns.Length - 1; i++)

table.Columns.Add(columns[i]);

var items = new List

It is simple function which we read rows and columns from the file and return them as DataSet

Now we can use this function to load our dataset to our SentenceModel List.

//Loads dataset from the dataset file

var data = LoadData("Filename of the dataset file", "Book1", new[] { "Text", "Category" });

//Define list model for stencemodel

var sentences = new List();

//Insert all comments to the list

foreach (DataRow row in data.Tables[0].Rows)

{

var sentences = new SentenceModel(row["Text"].ToString(), Convert.ToDouble(row["Category"].ToString()));

sentences.Add(sentences);

}

As we read all comments from dataset file and insert them into our list of sentence model, now we need to do two more additional processes before starting to feed the algorithm with the data.

The First process is filtering dataset for cleaning the words which we don't need to use while deciding if the comment is positive or negative, because of this, words such "the","he","she","to" etc need to be filtered.

The second process is preparing Bag of Words, but for our Bag of Words, we don't need to count the frequency of the word in the comments, we need such bag of words for defining unique ids for each of the words which are used in the comments. We need this because of our algorithm works with numeric data, not with the string data.

Let's start by writing our WordBagItem model.The model contains two fields, Word as a string, and Id as an int.

Word Bag Item

public class WordBagItem

{

public WordBagItem(string word, int id)

{

Word = word;

Id = id;

}

public string Word { get; private set; }

public int Id { get; private set; }

}

OK, let's write a function and extension which we can use for the two processes which we need to apply to dataset before feeding to our algorithm.

Prepare Word Bag Function

private void PrepareWordBag()

{

for (var i = 0; i < sentences.Count(); i++)

{

var words = (sentences[i].Text.ExtractFeatures().FilterFeatures());

foreach (var t in

from t in words

let wrd = wordBag.FirstOrDefault(x => x.Word.ToLower() == t)

where wrd == null

select t)

wordBag.Add(new WordBagItem(t.Trim().ToLower(), wordBag.Count + 1));

}

}

and the extensions model for splitting and filtering words

Extensions

public static class Helper

{

public static IEnumerable ExtractFeatures(this string text)

{

return Regex.Replace(text, "\\p{P}+", "").Split(' ').ToList();

}

public static IEnumerable FilterFeatures(this IEnumerable list)

{

var filters = new[]

{

".", ",", "!", "?", ":", ";", "_", "+", "/", @"\", "*", "the", "of", "on", "is", "a", "...", "1", "2", "3",

"4", "5", "6", "7", "8", "9", "0", " ", "for", "an", "", "it", "she", "he", "they", "we", "them", "our",

"his", "her"

};

return list.Where(x => !filters.Contains(x)).ToList();

}

}

We need one more class for our dataset which we use for passing our dataset to the algorithm by using it.

public class NaiveBayesTextModel

{

[AiLabel]

public double Label { get; set; }

[AiField]

public double WordId { get; set; }

public string Word { get; set; }

}

AiLabel and AiField are the attributes which tell to the algorithm which field of the data needs to use as which kind of feature.

Now we are ready to feed the algorithm with the dataset which we have.

var dataSet = new List();

foreach (var t in sentences)

{

var words = t.Text.ExtractFeatures();

foreach (var t1 in words)

{

var naiveBayesTextModel = new NaiveBayesTextModel();

var firstOrDefault = wordBag.FirstOrDefault(x => x.Word == t1.ToLower());

if (firstOrDefault == null) continue;

naiveBayesTextModel.WordId = firstOrDefault.Id;

naiveBayesTextModel.Label = t.Category;

naiveBayesTextModel.Word = t1.ToLower();

dataSet.Add(naiveBayesTextModel);

}

}

Naive Bayes Multinomial Technique

Naive Bayes has several different techniques, in this example, we use 'Naive Bayes Multinomial' technique which is very pretty effective for text/document classification.

Multinomial Technique based on the calculation of some key features from the dataset and making the prediction by using them. The key features which we need for this technique are;

Probability of the Label (in our case positive or negative)

P(Positive) = Total Positive Words/ Total Words

P(Negative) = Total Negative Words/ Total Words

Probability of the Word According to Each Type of Comment (In our case positive or negative)

P(Word/Positive)= (Number of occurrence of the word in the all "Positive" + 1) / (Total number of the words in "Positive" + Total number of unique words in all the types)

"Word" term which is above can be any word from our dataset. Lets say we have a word as "Great", so when we want to calculate probability according to type we need to use "Great" word instead of the Word in the formula (in our case we use Id which we generate for each word).

Those are the features which our algorithm needs to for calculation for making predictions.As we already discussed how we can read the dataset, and then clean and finally generate bags of words, now we can start to the coding algorithm itself.

Now, we are ready to start implementation of Naive Bayes Multinomial.

The codes which you see below, belongs to one of the my open source machine learning library Ellipses.

As you can see below, we need to use Converter Class which converts our classes to the type of data which the algorithm can work with.

Anyone who needs Converter Class codes, he or she can download it from Ellipses project page. For the sake of simplicity, I am not going to give that codes here.

We start by the writing interface for our Naive Bayes class, this class is our main class which we can pass a different type of techniques to apply to the algorithm (In this article, we use multinomial technique, but our class is suitable for defining other techniques and use it according to new requirements.).

INaiveBayes

namespace Ellipses.Interfaces

{

public interface INaiveBayes

{

///

/// Load data set

///

/// Data set

/// Normalize data

void LoadDataSet(T[] models, bool normalization = false);

///

/// Trains model for prediction

///

INaiveBayesPredicter Fit();

}

}

NaiveBayes

/* ========================================================================

* Ellipses Machine Learning Library 1.0

* https://www.ellipsesai.com

* ========================================================================

*

* Copyright Ali Gulum

*

* ========================================================================

* Licensed under the Creative Commons Attribution-NonCommercial 4.0 International License;

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* https://creativecommons.org/licenses/by-nc/4.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

* ========================================================================

*/

using System.Collections.Generic;

using System.Linq;

using Ellipses.Helpers;

using Ellipses.Interfaces;

using Ellipses.Metrics;

namespace Ellipses.Algorithms.Nb

{

public class NaiveBayes : INaiveBayes

{

//Trainer

private readonly INaiveBayesAlgorithm _algorithm;

//Helper class for converting models

private readonly IConverter _converter;

//Normalizer

private readonly INormalizer _normalizer;

//Data set as double array list

private double[][] _dataSet;

//Data set as matrix

private Matrix _matrix;

//Labels as matrix

private Matrix _matrixLabel;

///

/// Naive Bayes

///

/// Algorithm for the naive bayes algorithm

/// Normalizer

/// Converter for the models

public NaiveBayes(INaiveBayesAlgorithm algorithm = null, INormalizer normalizer = null,

IConverter converter = null)

{

_algorithm = algorithm ?? new NaiveBayesBinaryAlgorithm();

_normalizer = normalizer ?? new Normalizer();

_converter = converter ?? new Converter();

}

///

/// Load data set

///

/// Data set

/// Normalize data

public void LoadDataSet(T[] models, bool normalization = false)

{

var isDimensional = _converter.IsDimensionalFieldExist(models);

_dataSet = _converter.ConvertModels(models);

if (normalization)

_dataSet = _normalizer.Normalize(_dataSet);

_matrix = new Matrix(_dataSet);

_matrixLabel = _converter.ConvertLabelsToMatrix(models);

if (isDimensional)

PrepareDimensionalData(_converter.GetDimensionalData(models, normalization));

}

///

/// Trains model for prediction

///

public INaiveBayesPredicter Fit()

{

//Calculate probabilities of the labels

_algorithm.ComputeProbabilityOfLabels(_matrixLabel);

//Calculate conditional probabilities of the features

_algorithm.ComputeProbabilityOfFeatures(_matrix, _matrixLabel);

//Prepeare and return trained model for prediction

var naiveBayesPredicter = _algorithm.GetPredictor();

return naiveBayesPredicter;

}

#region Helpers

///

/// Prepares dimensional data

///

/// Matrix of dimensional data

private void PrepareDimensionalData(Matrix dimensionalMatrix)

{

var matrixValues = new List();

var dimensionalMatrixValues = new List();

var newMatrix = new List();

var newLabelList = new List();

for (var dRow = 0; dRow < dimensionalMatrix.Rows; dRow++)

dimensionalMatrixValues.Add(dimensionalMatrix[dRow].ToArray());

for (var mRow = 0; mRow < _matrix.Rows; mRow++)

matrixValues.Add(_matrix[mRow].ToArray());

for (var dVal = 0; dVal < dimensionalMatrixValues.Count; dVal++)

{

newMatrix.Add(matrixValues[dVal].Concat(dimensionalMatrixValues[dVal]).ToArray());

newLabelList.Add(_matrixLabel[dVal].ToArray());

}

_matrix = new Matrix(newMatrix.ToArray());

_matrixLabel = new Matrix(newLabelList.ToArray());

}

#endregion

}

}

In the Naive Bayes class we get the dataset model and convert it to a dimensional array, and we call related function of the technique which is defined as the current technique to use, finally, we ask for the technique to generate predictor for feature predictions. In our case current technique is Multinomial Technique, so let's define it.

INaiveBayesAlgorithm

using Ellipses.Metrics;

namespace Ellipses.Interfaces

{

public interface INaiveBayesAlgorithm

{

///

/// Computes probability of labels

///

/// Referance of Label Matrix

void ComputeProbabilityOfLabels(Matrix labelMatrix);

///

/// Computes probability of features

///

/// Matrix of data

/// Referance of Label Matrix

void ComputeProbabilityOfFeatures(Matrix matrix, Matrix labelMatrix);

///

/// Returns predictor for the algorithm

///

INaiveBayesPredicter GetPredictor();

}

}

NaiveBayesMultinomialAlgorithm

/* ========================================================================

* Ellipses Machine Learning Library 1.0

* https://www.ellipsesai.com

* ========================================================================

*

* Copyright Ali Gulum

*

* ========================================================================

* Licensed under the Creative Commons Attribution-NonCommercial 4.0 International License;

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* https://creativecommons.org/licenses/by-nc/4.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

* ========================================================================

*/

using System;

using System.Collections.Concurrent;

using System.Linq;

using System.Threading.Tasks;

using Ellipses.Helpers;

using Ellipses.Interfaces;

using Ellipses.Metrics;

using Ellipses.Models;

namespace Ellipses.Algorithms.Nb

{

public class NaiveBayesMultinomialAlgorithm : INaiveBayesAlgorithm

{

private const double TOLERANCE = 0.1;

//Helper for the various operations

private readonly IHelper _helper;

//Probabilities of the features

private ConcurrentBag _featureProbabilities;

//Matrix of labels

private Matrix _labelMatrix;

//Probabilities of the labels

private ConcurrentDictionary _labelProbabilities;

public NaiveBayesMultinomialAlgorithm()

{

_helper = new Helper();

}

///

/// Computes probability of labels

///

/// Referance of Label Matrix

public void ComputeProbabilityOfLabels(Matrix labelMatrix)

{

var labelProbabilities = new ConcurrentDictionary();

var labelList = labelMatrix.GetRows().Select(x => x[0]).Distinct().ToList();

for (var i = 0; i < labelList.Count(); i++)

{

var lbl = labelList[i];

var tLbl = labelMatrix.GetRows().Where(x => Math.Abs(x[0] - lbl) < TOLERANCE).ToList().Count();

var pLabel = (double) tLbl/labelMatrix.Rows;

labelProbabilities.TryAdd(lbl, pLabel);

}

_labelProbabilities = labelProbabilities;

}

///

/// Computes probability of features

///

/// Matrix of data

/// Referance of Label Matrix

public void ComputeProbabilityOfFeatures(Matrix matrix, Matrix labelMatrix)

{

var featureProbabilities = new ConcurrentBag();

//Connect matrix

var connectedMatrix = matrix.ConnectMatrix(labelMatrix);

var labelList = labelMatrix.GetRows().Select(x => x[0]).Distinct().ToList();

var featureLength = connectedMatrix.Cols;

var matrixRows = connectedMatrix.GetRows();

var vectors = matrixRows as Vector[] ?? matrixRows.ToArray();

Parallel.For(0, labelList.Count, l =>

{

Parallel.For(0, connectedMatrix.Rows, i =>

{

var fTotalAccordingToLabel =

vectors.Count(x => Math.Abs(x[featureLength - 1] - labelList[l]) < TOLERANCE);

var lUnique = connectedMatrix[i, featureLength - 1];

Parallel.For(0, matrix.Cols, j =>

{

var f = connectedMatrix[i, j];

var fTotal =

vectors.Count(

x =>

Math.Abs(x[featureLength - 1] - labelList[l]) < TOLERANCE &&

Math.Abs(x[j] - f) < TOLERANCE);

var fUniqueTotal = matrix.Select(x => x[j]).Distinct().ToList().Count;

var fProbability = ((double) fTotal + 1)/((double) fTotalAccordingToLabel + fUniqueTotal);

var probability = new NaiveBayesProbability

{

FeatureIndex = j,

FeatureProbability = Math.Abs(fProbability),

Feature = f,

FeatureTotal = fTotal,

Label = labelList[l],

LabelUnique = lUnique

};

featureProbabilities.Add(probability);

});

});

});

_labelMatrix = labelMatrix;

_featureProbabilities = featureProbabilities;

}

///

/// Returns predictor for the algorithm

///

public INaiveBayesPredicter GetPredictor()

{

return new NaiveBayesMultinomialPredicter(_labelProbabilities, _featureProbabilities, _labelMatrix);

}

}

}

and Predictor Interface and Class

NaiveBayesPredicter

using System.Collections.Generic;

using Ellipses.Models;

namespace Ellipses.Interfaces

{

public interface INaiveBayesPredicter

{

///

/// Predicts according to model

///

/// Model for prediction label

double Predict(T model);

///

/// Predicts according to model and return all probabilities for all labels

///

/// Model for prediction label

List PredictWithProbabilities(T model);

}

}

NaiveBayesMultinomialPredicter

/* ========================================================================

* Ellipses Machine Learning Library 1.0

* https://www.ellipsesai.com

* ========================================================================

*

* Copyright Ali Gulum

*

* ========================================================================

* Licensed under the Creative Commons Attribution-NonCommercial 4.0 International License;

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* https://creativecommons.org/licenses/by-nc/4.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

* ========================================================================

*/

using System;

using System.Collections.Concurrent;

using System.Collections.Generic;

using System.Linq;

using System.Threading.Tasks;

using Ellipses.Helpers;

using Ellipses.Interfaces;

using Ellipses.Metrics;

using Ellipses.Models;

namespace Ellipses.Algorithms.Nb

{

internal class NaiveBayesMultinomialPredicter : INaiveBayesPredicter

{

private const double TOLERANCE = 0.1;

//Helper class for converting models

private readonly IConverter _converter;

//Probabilities of the features

private readonly ConcurrentBag _featureProbabilities;

//Probabilities of the labels

private readonly ConcurrentDictionary _labelProbabilities;

//Labels

private readonly List _lbls;

///

/// Naive Bayes Predicter

///

/// Probability set for the labels

/// Probability set for the features

/// Matrix of label

public NaiveBayesMultinomialPredicter(ConcurrentDictionary labelProbabilities,

ConcurrentBag featureProbabilities, Matrix labelMatrix)

{

_converter = new Converter();

_labelProbabilities = labelProbabilities;

_featureProbabilities = featureProbabilities;

_lbls = labelMatrix.GetRows().Select(x => x[0]).Distinct().ToList();

}

///

/// Predicts according to model

///

/// Model for prediction label

public double Predict(T model)

{

return GetPrediction(model).Aggregate((l, r) => l.Value > r.Value ? l : r).Key;

}

///

/// Predicts according to model and return all probabilities for all labels

///

/// Model for prediction label

public List PredictWithProbabilities(T model)

{

return

GetPrediction(model).Select(v => new NaiveBayesResult {Label = v.Key, Probability = v.Value}).ToList();

}

///

/// Predicts according to model and return all probabilities for all labels

///

/// Model for prediction label

private ConcurrentDictionary GetPrediction(T model)

{

var probabilities = new ConcurrentDictionary();

var modelConverted = _converter.ConvertModel(model);

var dimensionalProcess = _converter.IsDimensional(model);

if (dimensionalProcess)

{

var newList = new List();

var dimensionalFeatures = _converter.ConvertDimensionalModel(model);

var orjModelValues = modelConverted.Select(t => t.ToArray()).ToList();

for (var dimensionalRow = 0; dimensionalRow < dimensionalFeatures.Length; dimensionalRow++)

newList.AddRange(orjModelValues.Select(t => t.Concat(dimensionalFeatures[dimensionalRow]).ToArray()));

modelConverted = newList.ToArray();

}

Parallel.For(0, _labelProbabilities.Count, l =>

{

var label = _labelProbabilities.Keys.ElementAt(l);

var fTotalAccordingToLabel =

_featureProbabilities.Where(x => Math.Abs(x.LabelUnique - label) < TOLERANCE).Distinct().Count()/

_labelProbabilities.Count;

var totalDot = 1.0;

Parallel.For(0, modelConverted[0].Count(), i =>

{

var f = modelConverted[0][i];

var fVal =

_featureProbabilities.FirstOrDefault(

x => Math.Abs(x.Feature - f) < TOLERANCE && Math.Abs(x.Label - label) < TOLERANCE);

if (fVal != null)

{

totalDot *= fVal.FeatureProbability;

}

else

{

const int fTotal = 0;

var fUniqueTotal = _featureProbabilities.Select(x => x.Feature).Distinct().ToList().Count;

var fProbability = ((double) fTotal + 1)/((double) fTotalAccordingToLabel + fUniqueTotal);

totalDot *= fProbability;

}

});

var probability = _labelProbabilities[label]*totalDot;

probabilities.TryAdd(label, probability);

});

return probabilities;

}

}

}

Now we are ready put all together and use Naive Bayes Algorithm with Multinomial Technique.

var naiveBayes = new NaiveBayes(new NaiveBayesMultinomialAlgorithm());

naiveBayes.LoadDataSet(dataSet.ToArray());

var predicter = naiveBayes.Fit();

So what do we do in general?

1. We prepare and clear dataset.

2. We generate unique ids for each word.

3. We pass them to the algorithm.

4. The algorithm converts models to numerical arrays which it can make probability calculations.

5. Algorithm applies to rule through to the data which we already defined here

6. Return predictors which we can use for making predictions.

Ok, let's check the result in the action. Let's ask for the algorithm to make a prediction for the sentence "It is a really great product, I like it!"

Below you can find the codes which we use for passing new sentence or sentences to the fitted model which makes predictions.

var sentences = new SentenceModel("It is a really great product, I like it!");

var words = (sentences.Text.ExtractFeatures());

var values = new List();

var naiveBayesTextModel = new NaiveBayesTextTextModel();

foreach (var t in words)

{

var wrd = wordBag.FirstOrDefault(x => x.Word.ToLower() == t);

if (wrd == null)

wordBag.Add(new WordBagItem(t.Trim().ToLower(), wordBag.Count + 1));

var firstOrDefault = wordBag.FirstOrDefault(x => x.Word == t.ToLower());

if (firstOrDefault == null) continue;

values.Add(firstOrDefault.Id);

}

naiveBayesTextModel.Values = new double[1][];

naiveBayesTextModel.Values[0] = values.ToArray();

var res = predicter.PredictWithProbabilities(naiveBayesTextModel);

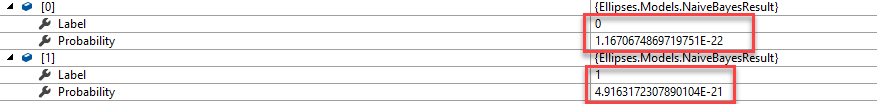

Here what we get;

As you can remember "0" represents "Negative" comment and "1" represents "Positive" comment, as you can see above, algorithm returns "Positive" label with higher probability.

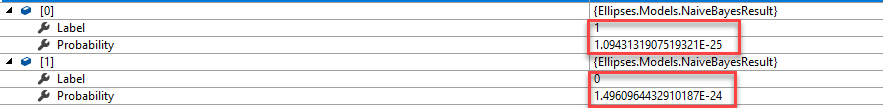

Let's try another example;

for the sentence "It doesn't cost, exactly terrible, I don't recommend it."

Pretty good! As you can see above, the Negative label has the higher probability than the Positive one.

Before finishing, I want to specify it one more time that Naive Bayes is really useful machine learning algorithm, and Multinomial Naive Bayes Technique is very effective for text/document classifications. You can improve the example which we did here by adding additional labels such "Neutral", that time you will need to check the highest probability in the result to decide prediction of the algorithm.

Thanks for reading.